Sunday, February 21, 2010

Tuesday, February 16, 2010

Extrapolating control node data from a point cloud

Here's an idea. If one can build skeletons for motion capture markers, then wouldn't it be possible to extract master control node data during a head/face motion capture session? Say you have your actor with markers on their face (I need to find out the brand of reflective stickers used by Creaform's 3D scanners, because these would work very well on small surface areas such as faces and hands). You capture a snapshot of the actor's face. The captured markers become the "point cloud". Using Vicon or some other program, you calculate the center of the captured markers/points. Then you construct a "skeleton" for the cloud by parenting every marker to a node at the calculated center, which acts as the skeleton root. And there you have it: a way of simultaneously obtaining detailed face deformations (which can be applied to a base template in the form of keyframed vertex movements, which is what Cat is working on) and master control node position/orientation data over time (which can be used in my proposed GUI setup). All in a single mocap session.
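Here is a rough sketch of the centroid-and-parenting step, assuming the markers for one captured frame arrive as plain 3D points. `Vec3`, `CloudSkeleton`, and `buildCloudSkeleton` are hypothetical names. Note that this only recovers the root position; orientation would need a separate step, such as a best-fit rotation of the current offsets against the offsets from a reference frame.

```cpp
#include <cstddef>
#include <vector>

// Minimal 3D point; a stand-in for whatever math type the real pipeline uses.
struct Vec3 {
    double x, y, z;
};

// A "skeleton" for one captured frame: a root at the centroid of the marker
// cloud, with every marker stored as an offset from (parented to) the root.
struct CloudSkeleton {
    Vec3 root{0.0, 0.0, 0.0};   // candidate master control node position
    std::vector<Vec3> offsets;  // marker positions relative to the root
};

// Hypothetical helper: builds the skeleton for a single snapshot of markers.
CloudSkeleton buildCloudSkeleton(const std::vector<Vec3>& markers) {
    CloudSkeleton skel;
    if (markers.empty()) return skel;

    // Centroid = average of all marker positions.
    for (const Vec3& m : markers) {
        skel.root.x += m.x;
        skel.root.y += m.y;
        skel.root.z += m.z;
    }
    const double n = static_cast<double>(markers.size());
    skel.root.x /= n;
    skel.root.y /= n;
    skel.root.z /= n;

    // "Parent" every marker to the root by storing its local offset.
    skel.offsets.reserve(markers.size());
    for (const Vec3& m : markers) {
        skel.offsets.push_back({m.x - skel.root.x, m.y - skel.root.y, m.z - skel.root.z});
    }
    return skel;
}
```

Running this once per captured frame would give the root's position over time, while the offsets carry the local face deformation.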

Taming of the OpenMesh

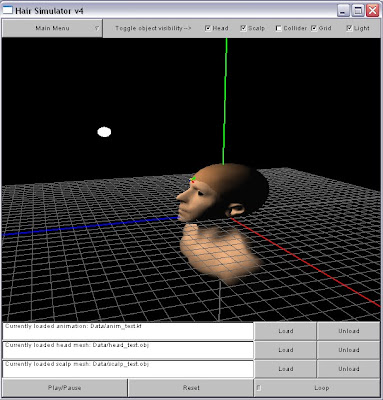

Goal: Add the ability to render/draw the original input head and scalp OBJs (perhaps store the geometry data in an OpenMesh data structure)

(a decimated test .obj to represent the input head - no collider just yet)


(the original .obj that Cat gave me...I would have to scale the program controls to accommodate its larger size...perhaps it would be a good idea to have this done automatically based on the mesh's bounding volume...)


Unfortunately, the other goals still need work, but I am proud that I figured out how to use the OpenMesh library (remember that pesky error from way, way back? I somehow managed to bypass it). Over the next several weeks, I am going to be presenting and implementing the Zinke paper ("Dual Scattering Approximation for Fast Multiple Scattering in Hair") for CIS660. The work from these assignments will feed right into the rendering portion of my hair simulator. It's going to be an offline (slow) process at first, but hopefully I can take the procedure used in my project code and give it the "senior project treatment" by porting it to the GPU. But we know how many times I've said "GPU" before, don't we... Speaking of which, I did receive some feedback on the Bullet forums about where I was going wrong with enabling simulation on the GPU. However, the person who apparently discovered a solution has not contacted me yet. I will bug them again.
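The idea from the caption about the original .obj (scaling the program controls automatically from the mesh's bounding volume) could look something like this. It's a self-contained sketch: it reads the `v` lines of an OBJ directly instead of going through OpenMesh, and `objBoundingBox`, `controlScale`, and the `referenceExtent` parameter are hypothetical.

```cpp
#include <algorithm>
#include <istream>
#include <limits>
#include <sstream>
#include <string>

struct BoundingBox {
    double min[3];
    double max[3];
    bool empty = true;
};

// Reads only the "v x y z" (vertex position) records of an OBJ stream and
// grows an axis-aligned bounding box around them; other records are skipped.
BoundingBox objBoundingBox(std::istream& in) {
    BoundingBox box;
    for (int i = 0; i < 3; ++i) {
        box.min[i] = std::numeric_limits<double>::max();
        box.max[i] = std::numeric_limits<double>::lowest();
    }
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream ls(line);
        std::string tag;
        double p[3];
        if (!(ls >> tag) || tag != "v") continue;  // "vn", "vt", "f", comments...
        if (!(ls >> p[0] >> p[1] >> p[2])) continue;
        for (int i = 0; i < 3; ++i) {
            box.min[i] = std::min(box.min[i], p[i]);
            box.max[i] = std::max(box.max[i], p[i]);
        }
        box.empty = false;
    }
    return box;
}

// Hypothetical rule: scale the on-screen controls in proportion to the mesh's
// largest extent. `referenceExtent` is the size the controls were tuned for.
double controlScale(const BoundingBox& box, double referenceExtent) {
    if (box.empty || referenceExtent <= 0.0) return 1.0;
    double extent = 0.0;
    for (int i = 0; i < 3; ++i) extent = std::max(extent, box.max[i] - box.min[i]);
    return extent > 0.0 ? extent / referenceExtent : 1.0;
}
```

With the geometry already loaded into OpenMesh, the same min/max loop would simply run over the mesh's vertex positions instead of re-reading the file.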

Friday, February 5, 2010

New and Accomplished Goals

Goal: Generate key frame data that specifies the position and orientation of a "master control node" at each frame; save this data in a file


I have indeed accomplished this goal. I made a simple animation in Maya (only 16 frames long) and copied the keyed position and orientation values by hand into a "keyframe" file (or KF). The KF is a format that I devised using the OBJ format as inspiration. As far as I know, there are a couple of other data formats that also use the .kf extension, but none of them seems to be extremely popular (I could always change the extension to something else; it's just a renamed .txt file). Here is the test.kf that I created based on the Maya animation. To enter my project pipeline, a person would have to write a converter that takes their animation format and converts it into the KF format. This would not be very difficult, given the simplicity of the KF. Hopefully the fact that it uses Euler angles won't be a problem.
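On the Euler angle question: one way to keep them from causing trouble is to convert each KF orientation into a rotation matrix as soon as it is parsed, so the rotation order is pinned down in exactly one place. This sketch assumes the angles are stored in degrees and follow Maya's default `xyz` rotate order (rotate about X first, then Y, then Z, so R = Rz * Ry * Rx for column vectors); `Mat3`, `multiply`, and `eulerXYZToMatrix` are hypothetical names.

```cpp
#include <cmath>

// 3x3 rotation matrix, row-major, acting on column vectors (v' = R * v).
struct Mat3 {
    double m[3][3];
};

Mat3 multiply(const Mat3& a, const Mat3& b) {
    Mat3 r{};  // zero-initialized accumulator
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                r.m[i][j] += a.m[i][k] * b.m[k][j];
    return r;
}

// Assumption: degrees, "xyz" rotate order (X applied first, Z last).
Mat3 eulerXYZToMatrix(double rxDeg, double ryDeg, double rzDeg) {
    const double kDegToRad = 3.14159265358979323846 / 180.0;
    const double x = rxDeg * kDegToRad, y = ryDeg * kDegToRad, z = rzDeg * kDegToRad;
    const Mat3 rx{{{1, 0, 0}, {0, std::cos(x), -std::sin(x)}, {0, std::sin(x), std::cos(x)}}};
    const Mat3 ry{{{std::cos(y), 0, std::sin(y)}, {0, 1, 0}, {-std::sin(y), 0, std::cos(y)}}};
    const Mat3 rz{{{std::cos(z), -std::sin(z), 0}, {std::sin(z), std::cos(z), 0}, {0, 0, 1}}};
    return multiply(rz, multiply(ry, rx));  // R = Rz * Ry * Rx
}
```

If a converter for some other package writes angles in a different order, only this function would need to change.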


Goal: Parse the .kf file and store its data in a dictionary whose keys are frame numbers and values are position/orientation vectors

Well, I didn't end up using a dictionary data structure. Vectors with index values corresponding to keyframe numbers work just fine. Negative keyframes are also possible in theory: my parser uses the start and end frames of the KF to calculate the offset needed to convert keyframe numbers into vector indices and vice versa.
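The start/end-frame offset can be sketched as a small container. This is an illustration rather than the actual parser: `Keyframe` and `KeyframeTrack` are hypothetical names, and reading the KF text itself is left out since the file's exact layout isn't reproduced here.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// One keyframe: position and Euler orientation of the master control node.
struct Keyframe {
    double pos[3];
    double rot[3];
};

// Keyframes live in a std::vector; the KF's start frame is kept as an offset
// so that frame numbers (possibly negative) map onto vector indices.
class KeyframeTrack {
public:
    KeyframeTrack(int startFrame, int endFrame)
        : start_(startFrame),
          frames_(endFrame >= startFrame ? static_cast<std::size_t>(endFrame - startFrame + 1) : 0) {}

    int startFrame() const { return start_; }
    int endFrame() const { return start_ + static_cast<int>(frames_.size()) - 1; }

    // Frame number -> vector index.
    std::size_t indexOf(int frame) const {
        if (frame < start_ || frame > endFrame()) {
            throw std::out_of_range("frame outside the KF's start/end range");
        }
        return static_cast<std::size_t>(frame - start_);
    }

    // Vector index -> frame number.
    int frameOf(std::size_t index) const { return start_ + static_cast<int>(index); }

    Keyframe& at(int frame) { return frames_[indexOf(frame)]; }
    const Keyframe& at(int frame) const { return frames_[indexOf(frame)]; }

private:
    int start_;
    std::vector<Keyframe> frames_;
};
```

Compared with a dictionary, lookups are a subtraction plus an array access, and iterating in frame order comes for free.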

Goal: Develop a simple GUI that allows the user to change the position/orientation of a rendered master control node according to the parsed .kf data (i.e. "play" a .kf animation)

I also accomplished this one. Check out the videos below to compare the test animation being played in Maya with test.kf being played in my GUI. To play back animations at the correct framerate (barring some other big process slowing my computer down, which might happen once the sim is brought over), I used a timer class that measures system ticks. I did not write this class (I found it here), but it uses common functions provided by both Windows and Linux (I'm trying to keep things as platform independent as I can; as far as I know, FLTK and GLUT are platform independent, and the only thing that isn't is my VS project file).
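Timer-driven playback can be sketched by converting elapsed time into a frame number. This is an assumption-laden sketch, not the project's code: `std::chrono::steady_clock` stands in for the tick-counting timer class, and `frameAtTime` and `Player` are hypothetical names.

```cpp
#include <chrono>
#include <cmath>

// Maps elapsed wall-clock time to a keyframe number so playback runs at
// `fps` regardless of how fast the render loop happens to spin.
int frameAtTime(double elapsedSeconds, double fps, int startFrame, int endFrame, bool loop) {
    const long long count = static_cast<long long>(endFrame) - startFrame + 1;
    if (count <= 0 || fps <= 0.0 || elapsedSeconds < 0.0) return startFrame;
    long long n = static_cast<long long>(std::floor(elapsedSeconds * fps));
    if (loop) {
        n %= count;          // wrap around to the first frame
    } else if (n >= count) {
        n = count - 1;       // hold the last frame
    }
    return startFrame + static_cast<int>(n);
}

// Hypothetical player: remembers when playback started and asks
// frameAtTime which frame should be showing right now.
class Player {
public:
    explicit Player(double fps) : fps_(fps), t0_(std::chrono::steady_clock::now()) {}

    void restart() { t0_ = std::chrono::steady_clock::now(); }

    int currentFrame(int startFrame, int endFrame, bool loop) const {
        const std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - t0_;
        return frameAtTime(elapsed.count(), fps_, startFrame, endFrame, loop);
    }

private:
    double fps_;
    std::chrono::steady_clock::time_point t0_;
};
```

Deriving the frame from elapsed time (rather than advancing one frame per redraw) means a slow redraw skips frames instead of turning the animation into slow motion.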

(first with Maya - I had to loop the animation manually, since letting Maya do the looping made the animation a little crazy)

(and here's my version - the messing around in the beginning is to show off the scene camera)

Since the new GUI is hard to see clearly in the video, here's a larger screenshot:

Goal: Plug Bullet into the GUI and use control laws to compute the linear and angular velocity (or force) necessary to move the head rigid body so that its position/orientation matches that of the control node

I have not yet accomplished this one. But I will keep working towards it! Here's how I plan on breaking it down:


- Insert all the necessary dependencies and linker definitions for Bullet into my VS project

- Create a class that asks Bullet for the vertices or faces of the head rigid body shape at each frame and uses this information to draw the shape (perhaps store the geometry data in an OpenMesh data structure) - I'll need to sift through the Bullet demo application code to find where/how scene objects are being drawn (maybe all I need to do is copy over a couple functions)

- Create a class that takes a pointer to the control node as input and generates force/torque values (using feedback controls), which it then applies to the Bullet head - if force and torque can't generate "instantaneous" control over the rigid body head, then I'll need to figure out how to generate velocity values instead

- Finish the remaining goal specified above

- Add the ability to render/draw the original input head and scalp OBJs (perhaps store the geometry data in an OpenMesh data structure)

- Add the ability to render the Bullet soft body key hairs as lines

- Read papers (and dissect tutorials) pertaining to writing shaders - I can't seem to make up my mind when it comes to which method of rendering I want to implement; hopefully more research will help me choose
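The feedback-control step in the plan above could start from a proportional-derivative (PD) law. This is a hedged sketch: a point mass stands in for the Bullet rigid body, the gains are made up, and `PDGains`, `pdControl`, `PointMass`, and `step` are hypothetical names. In Bullet, the resulting vector would presumably be handed to `btRigidBody::applyCentralForce` (and the angular version to `btRigidBody::applyTorque`).

```cpp
#include <array>

using Vec3 = std::array<double, 3>;

// PD gains; real values would need tuning against the head's mass/inertia.
struct PDGains {
    double kp;  // stiffness: pulls toward the target
    double kd;  // damping: resists the current velocity
};

// Proportional-derivative law: u = kp * (target - current) - kd * velocity.
// On positions this yields a force; on a (small) per-axis orientation error
// it yields an approximate torque.
Vec3 pdControl(const Vec3& target, const Vec3& current, const Vec3& velocity, const PDGains& g) {
    Vec3 u{};
    for (int i = 0; i < 3; ++i) {
        u[i] = g.kp * (target[i] - current[i]) - g.kd * velocity[i];
    }
    return u;
}

// Hypothetical point-mass stand-in for the rigid body, used only to show
// the control law pulling the body onto the control node.
struct PointMass {
    Vec3 pos{};
    Vec3 vel{};
    double mass = 1.0;
};

// Semi-implicit Euler: update velocity from the force, then position.
void step(PointMass& b, const Vec3& force, double dt) {
    for (int i = 0; i < 3; ++i) {
        b.vel[i] += force[i] / b.mass * dt;
        b.pos[i] += b.vel[i] * dt;
    }
}
```

Choosing kd close to 2 * sqrt(kp * mass) keeps the response near critically damped, so the head settles without oscillating. For orientation, subtracting Euler angles per axis is only a rough small-angle approximation; a sturdier error term would come from the relative rotation between the body and the control node.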

Tuesday, February 2, 2010

Approach the Shaders with Caution

I have never written a shader before, so the rendering part of this project has me a little on edge. But then I found this tutorial (kind of outdated, but still decent) about how to generate fur shaders using DirectX Effect files (.fx). I was happy to get some of the tutorial code to compile and run. Now I have several resources showing me how to plug shaders into a framework. In fact, that's what confuses me most about shaders: I'm not sure how they're supposed to fit into my current code base. Since I am using OpenGL to render things in my scene at the moment, I'm guessing I should look into GLSL, the OpenGL Shading Language. This tutorial looks like it might be helpful (now if I could just get the example code to compile on my computer...).

If I had a penny for every GUI I made...

Concept:

Currently, we have:

Alas, it is just a skeleton GUI at the moment. All it can do is hide and show items like the scene light and scene grid. There's the "control node" there too, which can be fed position and orientation data, but I still need to create the player class (which will feed KF data to the control node).


Let's put some meat on this skeleton! (...)

