| Goal | Time Required for Completion | Priority (on a scale of 1 to 10) |

|---|---|---|

| Grow key hairs out of the scalp mesh according to a procedurally generated vector field (user will input the local axes of the scalp mesh) | 2 days | 8 |

| Adjust key hair soft body values until they behave more like real hairs | 1 day | 10 |

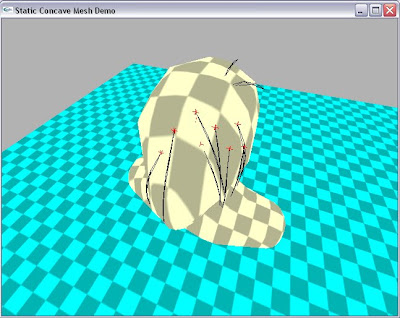

| Bring the Bullet code over to my OpenGL framework (i.e. render Bullet's physics bodies myself instead of letting Bullet's Demo Application class do everything) | 7 days | 10 |

| Tessellate the key hairs using B-splines | 14 days | 5 |

| Use interpolation ("multi strand interpolation") between key hairs to grow and simulate filler hairs | 14 days | 8 |

| Build strips (and clusters) around each control and filler hair | 5 days | 9 |

| Implement the Marschner reflectance model to render/color a polygonal strip of varying width | 21 days | 8 |

| Implement the Kajiya shading model to render/color a polygonal strip of varying width (based on results, choose the "better" method, or somehow combine the two) | 21 days | 7 |

| If the chosen model doesn't color wider strips realistically, modify the renderer so that it interprets 1 wide strip as X skinny strips (perhaps using an opacity/alpha map) | 14 days | 6 |

| Implement the (modified) chosen model to render/color a 3d polygonal cluster | 10 days | 8 |

| ... | ... | ... |

I'll continue to fill in and modify this list over the next week. I'm not really sure how things will be going for me by the time I get to the rendering part of the project, so it's difficult to plan that far ahead. :-/ I did find a (semi) new resource for rendering, though: the NVIDIA Real Time Hair SIGGRAPH Presentation. Unfortunately, it's a bit discouraging, because most of it showcases the latest GPU technology, which I can't tap into at the moment (nor do I think I ever could, since they mention Direct3D 11! I only have 9...).
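
For comparison with the GPU-heavy techniques, the Kajiya model from the list above needs only a few dot products per shaded point. The sketch below is a CPU reference version in C++ of the commonly cited Kajiya-Kay diffuse and specular terms, written from the usual textbook form rather than from any code in this project. `Vec3`, `HairShade`, `kajiyaKay`, and the `shininess` parameter are hypothetical names chosen for illustration; color, light intensity, and the strip-width handling mentioned in the list are left out.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(dot(v, v));
    if (len <= 0.0f) return {0.0f, 0.0f, 0.0f};  // leave degenerate vectors at zero
    return {v.x / len, v.y / len, v.z / len};
}

// Scalar diffuse and specular factors; multiply by hair and light colors elsewhere.
struct HairShade { float diffuse; float specular; };

// tangent: direction along the hair strand at the shaded point
// toLight: direction from the shaded point toward the light
// toEye:   direction from the shaded point toward the camera
HairShade kajiyaKay(const Vec3& tangent, const Vec3& toLight,
                    const Vec3& toEye, float shininess) {
    const Vec3 T = normalize(tangent);
    const Vec3 L = normalize(toLight);
    const Vec3 V = normalize(toEye);

    const float tl = dot(T, L);
    const float tv = dot(T, V);

    // sin of the angle between the tangent and each direction, clamped
    // against small negative values caused by rounding.
    const float sinTL = std::sqrt(std::max(0.0f, 1.0f - tl * tl));
    const float sinTV = std::sqrt(std::max(0.0f, 1.0f - tv * tv));

    HairShade out;
    // Diffuse: brightest when the light is perpendicular to the strand.
    out.diffuse = sinTL;
    // Specular: (T.L)(T.V) + sin(T,L) sin(T,V), raised to a shininess power.
    out.specular = std::pow(std::max(0.0f, tl * tv + sinTL * sinTV), shininess);
    return out;
}
```

The same arithmetic should port to a pixel shader more or less line for line, which is why this model looks like a reasonable fallback if the Direct3D 11 features from the presentation stay out of reach; whether it looks good enough on wide strips is exactly what the list above plans to find out.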